Speech Recognition

imported>Sean Plankey |


<noinclude>{{subpages}}</noinclude>

{{TOC|right}}<onlyinclude>

In computer technology, '''[[Speech Recognition]]''' refers to the recognition of [[spoken language|human speech]] by computers in order to perform speaker-initiated, computer-generated functions (e.g., transcribing speech to text, data entry, operating electronic and mechanical devices, and automated processing of telephone calls). It is a main element of so-called [[natural language]] processing through computer speech technology.

Speech derives from sounds created by the [[human]] [[articulatory phonetics|articulatory]] system, including the [[lung]]s, [[vocal cord]]s, and [[tongue]]. Through exposure to variations in speech patterns during infancy, a child learns to recognize the same words or phrases despite differences in the way different people pronounce them, e.g., in pitch, tone, emphasis, and intonation pattern. The cognitive ability of the [[brain]] enables humans to achieve that remarkable capability. As of this writing (2008), we can reproduce that capability in computers only to a limited degree, though even that limited capability is useful in many ways.

<noinclude>== The Challenge of Speech Recognition ==</noinclude>

[[Image:Waveform_I_went_to_the_store_yesterday.jpg|thumb|Waveform of "I went to the store yesterday."]] [[Image:Spectrogram_I_went_to_the_store_yesterday.jpg|thumb|Spectrogram of "I went to the store yesterday."]] [[Writing system]]s are ancient, going back as far as the [[Sumerians]] of 6,000 years ago. The [[phonograph]], which allowed the analog recording and playback of speech, dates to 1877. Speech recognition, however, had to await the development of the computer, because of the many problems involved in recognizing speech.

First, speech is not simply spoken text, in the same way that Miles Davis playing ''So What'' can hardly be captured by a note-for-note rendition as sheet [[music]]. What humans understand as discrete [[word]]s, [[phrase]]s or [[sentence (linguistics)|sentence]]s with clear boundaries is actually delivered as a continuous stream of sounds: ''Iwenttothestoreyesterday'', rather than ''I went to the store yesterday''. Words can also blend, with ''Whaddayawa?'' representing ''What do you want?''

Second, there is no one-to-one correspondence between sounds and [[letter (alphabet)|letters]]. In [[English language|English]], there are slightly more than five [[vowel]] letters: ''a'', ''e'', ''i'', ''o'', ''u'', and sometimes ''y'' and ''w''. There are more than twenty different vowel sounds, though, and the exact count can vary depending on the speaker's accent. The reverse problem also occurs: more than one letter can represent a given sound. The letter ''c'' can have the same sound as the letter ''k'', as in ''cake'', or as the letter ''s'', as in ''citrus''.

In addition, people who speak the same language do not all use the same sounds; that is, varieties of a language differ in their [[phonology]], or patterns of sound organization. There are different [[accent (linguistics)|accents]]: the word 'water' could be pronounced ''watter'', ''wadder'', ''woader'', ''wattah'', and so on. Each person has a distinctive [[pitch (linguistics)|pitch]] when speaking: men typically have the lowest pitch, while women and children have higher pitch (though there is wide variation and overlap within each group). [[Pronunciation]] is also colored by adjacent sounds, the speed at which the user is talking, and even the user's health. Consider how pronunciation changes when a person has a cold.

Lastly, consider that not all sounds consist of meaningful speech. Regular speech is filled with interjections that have no meaning in themselves but serve to break up [[discourse]] and convey subtle information about the speaker's feelings or intentions: ''Oh'', ''like'', ''you know'', ''well''. There are also sounds that are part of speech but are not considered words: ''er'', ''um'', ''uh''. Coughing, sneezing, laughing, sobbing, and even hiccupping can be part of what is spoken. And the environment adds its own noises; speech recognition is difficult even for humans in noisy places. </onlyinclude>


== History of Speech Recognition ==

Despite the manifold difficulties, speech recognition has been attempted for almost as long as there have been digital computers. As early as 1952, researchers at Bell Labs had developed an Automatic Digit Recognizer, or "Audrey". Audrey attained an accuracy of 97 to 99 percent if the speaker was male, and if the speaker paused 350 milliseconds between words, and if the speaker limited his vocabulary to the digits from one to nine, plus "oh", and if the machine could be adjusted to the speaker's speech profile. Results dipped as low as 60 percent if the recognizer was not adjusted.<ref> K.H. Davis, R. Biddulph, S. Balashek: Automatic recognition of spoken digits. Journal of the Acoustical Society of America. '''24''', 637-642 (1952)</ref>

Audrey worked by recognizing [[phoneme]]s, or individual sounds that were considered distinct from each other. The phonemes were correlated to reference models of phonemes that were generated by training the recognizer. Over the next two decades, researchers spent large amounts of time and money trying to improve upon this concept, with little success. Computer hardware improved by leaps and bounds, speech synthesis improved steadily, and [[Noam Chomsky]]'s idea of [[generative grammar]] suggested that language could be analyzed programmatically. None of this, however, seemed to improve the state of the art in speech recognition. Chomsky and Halle's generative work in phonology also led mainstream linguistics to abandon the concept of the "phoneme" altogether, in favour of breaking down the sound patterns of language into smaller, more discrete "features".<ref>Chomsky, N. & M. Halle (1968) ''The Sound Pattern of English''. New York: Harper and Row.</ref>

In 1969, [[John R. Pierce]] wrote a forthright letter to the Journal of the Acoustical Society of America, where much of the research on speech recognition was published. Pierce was one of the pioneers in satellite communications, and an executive vice president at Bell Labs, which was a leader in speech recognition research. Pierce said everyone involved was wasting time and money.


In 2001, [[Microsoft]] released a speech recognition system that worked with Office XP. It neatly encapsulated how far the technology had come in fifty years, and what its limitations still were. The system had to be trained to a specific user's voice, using the supplied works of great authors, such as Edgar Allan Poe's ''The Fall of the House of Usher'' and Bill Gates' ''The Way Forward''. Even after training, the system was fragile enough that it came with a warning: "If you change the room in which you use Microsoft Speech Recognition and your accuracy drops, run the Microphone Wizard again." On the plus side, the system did work in real time, and it did recognize connected speech.

== Speech Recognition as of 2009 ==

===Technology===

Current voice recognition technologies rely on mathematically analyzing the sound waves formed by our voices, using resonance and spectrum analysis. Computer systems first record the sound waves spoken into a microphone through an analog-to-digital converter. The analog, or continuous, sound wave that we produce when we say a word is sliced up into small time fragments. These fragments are then measured by their amplitude levels, which reflect the degree of compression of the air released from a person's mouth. To decide how often the amplitude must be measured when converting a sound wave to digital format, the industry commonly relies on the Nyquist-Shannon theorem.<ref>Jurafsky, D. and Martin, J. H. ''Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition''. New Jersey: Prentice Hall 2006</ref>
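The digitization steps just described (measuring amplitude at discrete instants, storing each measurement as a number, and slicing the result into short time fragments) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's front end: a synthetic 440 Hz tone stands in for microphone input, and the 16 kHz sampling rate, 16-bit resolution, and 25 ms frames with a 10 ms hop are assumed values often used for speech. All function names are hypothetical.

```python
import math

def sample_tone(freq_hz, sample_rate_hz, duration_ms):
    """Measure a pure sine tone (stand-in for microphone input) at evenly spaced instants."""
    n_samples = sample_rate_hz * duration_ms // 1000
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(n_samples)]

def quantize_16bit(samples):
    """Round amplitudes in [-1.0, 1.0] to signed 16-bit integers, as an ADC would."""
    return [max(-32768, min(32767, round(s * 32767))) for s in samples]

def frame_signal(samples, sample_rate_hz, frame_ms=25, hop_ms=10):
    """Slice the digitized stream into short, overlapping time fragments (frames)."""
    frame_len = sample_rate_hz * frame_ms // 1000
    hop = sample_rate_hz * hop_ms // 1000
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]

rate = 16000  # assumed: 16 kHz is a common sampling rate for speech
pcm = quantize_16bit(sample_tone(440, rate, 100))  # 100 ms of a 440 Hz tone
frames = frame_signal(pcm, rate)
print(len(pcm), len(frames), len(frames[0]))  # 1600 8 400
```

Overlapping frames are a design choice: each 25 ms slice is short enough that the sound is roughly stable within it, while the 10 ms hop keeps neighbouring slices from missing transitions between sounds.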

'''Nyquist-Shannon Theorem'''<br />

The Nyquist-Shannon theorem grew out of work Harry Nyquist published in 1928 and was proved in general form by Claude Shannon in 1949. It shows that an analog signal containing frequencies up to a given maximum can be recreated accurately from digital samples taken at more than twice that maximum frequency. Intuitively, each cycle of an audible frequency must be sampled at least once during compression and once during rarefaction. For example, a 20 kHz audio signal can be accurately represented by digital samples taken at 44.1 kHz.<br />
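The arithmetic behind the 44.1 kHz example can be checked with a short sketch. The helper names are hypothetical; `alias_frequency` applies the standard folding rule for a pure tone, which shows what goes wrong when a tone lies above half the sampling rate: it is not lost, but reappears as a false, lower tone.

```python
def nyquist_rate(max_freq_hz):
    """Sampling must exceed twice the highest frequency present in the signal."""
    return 2 * max_freq_hz

def alias_frequency(signal_hz, sample_rate_hz):
    """Apparent frequency of a pure tone after sampling, folded into 0..fs/2."""
    f = signal_hz % sample_rate_hz
    return min(f, sample_rate_hz - f)

print(nyquist_rate(20_000))             # 40000
print(alias_frequency(20_000, 44_100))  # 20000: below fs/2, represented faithfully
print(alias_frequency(30_000, 44_100))  # 14100: above fs/2, folds to a false tone
```

This is why audio is low-pass filtered before conversion: any energy above half the sampling rate would otherwise be mistaken for a lower frequency.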

'''How it Works'''<br />

Speech recognition programs commonly use statistical models to account for variations in dialect, accent, background noise, and pronunciation. These models have progressed to such an extent that accuracy of over 90% can be achieved in a quiet environment. While every company has its own proprietary technology for processing spoken input, there are four common approaches to recognizing speech. <br />

*1. '''Template-Based''': This model uses a database of speech patterns built into the program. When voice input is received, the system recognizes it by matching the input against the database, using dynamic programming algorithms. The weakness of this type of speech recognition is that the model is not flexible enough to understand voice patterns unlike those in the database. <br />

*2. '''Knowledge-Based''': Knowledge-based speech recognition analyzes spectrograms of the speech to gather data and create rules that return the commands or words the user said. Rather than learning automatically from data, it relies on expert linguistic and phonetic knowledge about speech, hand-coded as rules.

*3. '''Stochastic''': Stochastic speech recognition is the most common approach today. Stochastic methods of voice analysis use probability models to capture the uncertainty of the spoken input. The most popular probability model is the Hidden Markov Model (HMM), whose use is shown below. <br />

[[Image:HMM Model Equation.gif|center|thumb|350px|{{#ifexist:Template:HMM Model Equation.gif/credit|{{HMM Model Equation.gif/credit}}<br/>|}}]]

Y<sub>t</sub> is the observed acoustic data, p(W) is the a priori probability of a particular word string, p(Y<sub>t</sub>|W) is the probability of the observed acoustic data given that word string, as computed by the acoustic models, and W is the hypothesized word string.
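Written with these symbols, the standard Bayesian decision rule that HMM-based recognizers apply is given below. This is the textbook form of the rule, offered as a reading aid; it is not a transcription of the image above, whose exact notation may differ.

:<math>\hat{W} = \arg\max_{W} p(W \mid Y_t) = \arg\max_{W} \frac{p(Y_t \mid W)\,p(W)}{p(Y_t)} = \arg\max_{W} p(Y_t \mid W)\,p(W)</math>

Because p(Y<sub>t</sub>) is the same for every candidate W, it can be dropped from the maximization. The recognizer therefore picks the word string that best balances the acoustic model score p(Y<sub>t</sub>|W) against the language model score p(W).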

In analyzing spoken input, the HMM approach has proven successful because it takes into account a language model, an acoustic model of how humans speak, and a lexicon of known words.

*4. '''Connectionist''': In connectionist speech recognition, knowledge about spoken input is gained by training artificial neural networks on that input and storing what they learn in their connections. The networks range from simple multi-layer perceptrons to time-delay neural networks and recurrent neural networks.
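The dynamic programming used by the template-based approach (item 1) is classically dynamic time warping (DTW), which aligns an utterance with each stored template even when it is spoken faster or slower, then picks the closest template. The sketch below is illustrative only: each time frame is reduced to a single number (real systems compare vectors of acoustic features), and the templates and utterance are made-up values. The function names are hypothetical.

```python
def dtw_distance(a, b):
    """Dynamic time warping cost between two feature sequences (lists of numbers)."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])          # local distance between two frames
            cost[i][j] = d + min(cost[i - 1][j],      # a advances, b's frame is stretched
                                 cost[i][j - 1],      # b advances, a's frame is stretched
                                 cost[i - 1][j - 1])  # both advance together
    return cost[n][m]

def recognize(utterance, templates):
    """Return the label of the stored template with the lowest DTW cost."""
    return min(templates, key=lambda label: dtw_distance(utterance, templates[label]))

templates = {"yes": [1, 3, 4, 3, 1], "no": [2, 2, 0, 0, 2]}  # hypothetical database
spoken = [1, 1, 3, 4, 4, 3, 1]                              # a slower "yes"
print(recognize(spoken, templates))  # yes
```

The same code also shows the weakness noted in item 1: an utterance whose pattern resembles none of the stored templates is still forced onto the nearest one.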

As stated above, programs that use stochastic models to analyze spoken language are the most common today and have proven to be the most successful.<ref>Gales, Mark John Francis. Model Based Techniques for Noise Robust Speech Recognition. Gonville and Caius College, September 1995.</ref>
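Decoding with an HMM is usually done with the Viterbi algorithm, which finds the most probable sequence of hidden states (for example, phones) for a sequence of observations. The sketch below is a toy under loud assumptions: the two phone states, the coarse "hiss"/"voiced" observation labels, and all probabilities are invented for illustration, whereas real recognizers score continuous acoustic features against far larger models. Log-probabilities are summed instead of multiplying raw probabilities, so long utterances do not underflow.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely hidden state sequence for an HMM (all probabilities must be > 0)."""
    # best[s] = (log-probability of the best path ending in state s, that path)
    best = {s: (math.log(start_p[s]) + math.log(emit_p[s][observations[0]]), [s])
            for s in states}
    for obs in observations[1:]:
        new_best = {}
        for s in states:
            # choose the predecessor whose path gives the highest score into s
            score, path = max(
                (best[prev][0] + math.log(trans_p[prev][s]), best[prev][1])
                for prev in states)
            new_best[s] = (score + math.log(emit_p[s][obs]), path + [s])
        best = new_best
    return max(best.values())[1]

states = ("s", "iy")
start_p = {"s": 0.9, "iy": 0.1}
trans_p = {"s": {"s": 0.6, "iy": 0.4}, "iy": {"s": 0.1, "iy": 0.9}}
emit_p = {"s": {"hiss": 0.8, "voiced": 0.2}, "iy": {"hiss": 0.1, "voiced": 0.9}}
observed = ["hiss", "hiss", "voiced", "voiced"]
print(viterbi(observed, states, start_p, trans_p, emit_p))  # ['s', 's', 'iy', 'iy']
```

In a full recognizer the same search runs over states drawn from the acoustic model, the lexicon, and the language model at once, which is how those three components mentioned above combine.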

'''Recognizing Commands'''<br />

The most important goal of current speech recognition software is to recognize commands, which increases the functionality of speech software. Software such as Ford SYNC, developed with Microsoft, is built into many new vehicles, supposedly allowing users to access all of the car's electronic accessories hands-free. This software is adaptive: it asks the user a series of questions and uses the pronunciation of commonly used words to derive speech constants. These constants are then factored into the speech recognition algorithms, allowing the application to provide better recognition in the future. Tech reviewers have said the technology is much improved since the early 1990s but will not be replacing hand controls any time soon. <ref>http://etech.eweek.com/content/enterprise_applications/recognizing_speech_recognition.html </ref>

'''Dictation'''<br />

Second to command recognition is dictation. Today's market sees value in dictation software for transcribing medical records (discussed below) and student papers, and as a more productive way to put one's thoughts into writing. In addition, many companies see value in dictation for translation: users could have their words translated into written letters, or translated so that they could then say the words back to another party in that party's native language. Products of these types already exist in the market today.

'''Errors in Interpreting the Spoken Word'''<br />

As speech recognition programs process your spoken words, their success depends on their ability to minimize errors. Two measures of this are the '''Single Word Error Rate''' (SWER) and the '''Command Success Rate''' (CSR). A single word error is, simply put, a misunderstanding of one word in a spoken sentence. While SWERs can be found in command recognition programs, they are most commonly found in dictation software. Command success is defined as an accurate interpretation of the spoken command. Not every word in a command statement may be correctly interpreted, but the recognition program can use mathematical models to deduce the command the user wants to execute.
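Both ideas can be illustrated with a word-level edit distance. The sketch below computes the common word error rate (substitutions, deletions, and insertions divided by the number of reference words); treating that as equivalent to the SWER named above is an assumption. It then shows one simple way a program might still recover the intended command from an imperfect transcript: picking the known command that needs the fewest word edits. The command list and function names are hypothetical, and real systems use richer statistical models.

```python
def word_edit_distance(ref, hyp):
    """Minimum substitutions + deletions + insertions turning word list ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (r != h)))  # substitution (or match)
        prev = curr
    return prev[-1]

def word_error_rate(reference, hypothesis):
    """Word-level errors divided by the number of words actually spoken."""
    ref, hyp = reference.split(), hypothesis.split()
    return word_edit_distance(ref, hyp) / len(ref)

def closest_command(hypothesis, commands):
    """Known command needing the fewest word edits (ties go to the first listed)."""
    hyp = hypothesis.split()
    return min(commands, key=lambda c: word_edit_distance(c.split(), hyp))

print(word_error_rate("call home now", "call foam now"))  # 0.3333333333333333
commands = ["call home", "play music", "turn up the radio"]
print(closest_command("call foam", commands))             # call home
```

Here one word in three is misrecognized, yet the command still succeeds, which is the distinction the two measures are meant to capture.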

===Business===

==== Major Speech Technology Companies ====

As the speech technology industry grows, more companies are entering the field, bringing new products and ideas with them. Some of the leaders in voice recognition technologies (but by no means all of them) are listed below.

*'''NICE Systems''' (NASDAQ: NICE and Tel Aviv: Nice), headquartered in [[Israel]] and founded in 1986, specializes in digital recording and archiving technologies. In 2007 it made $523 million in revenue. For more information visit http://www.nice.com.

*'''Verint Systems Inc.''' (OTC: VRNT), headquartered in Melville, New York and founded in 1994, describes itself as “A leading provider of actionable intelligence solutions for workforce optimization, IP video, communications interception, and public safety.”<ref>"About Verint," ''Verint'', accessed August 8, 2008, <http://verint.com/corporate/index.cfm></ref> For more information visit http://verint.com.

*'''Nuance''' (NASDAQ: NUAN), headquartered in Burlington, Massachusetts, develops speech and image technologies for business and customer service uses. For more information visit http://www.nuance.com/.


==== Patent Infringement Lawsuits ====

Given the highly competitive nature of both business and technology, it is not surprising that various speech companies have brought numerous patent infringement lawsuits. Each element involved in developing a speech recognition device can be claimed as a separate technology, and hence patented as such. Using a technology that is patented by another company or individual, even if it was developed independently, can make the user liable for monetary compensation and often results in injunctions preventing the company from using the technology in the future. Because the politics and business of the speech industry are tightly tied to speech technology development, it is important to recognize legal and political barriers that may impede further developments in this industry. Below are descriptions of a number of patent infringement lawsuits. Note that many more such suits are currently on the docket, and more are being brought to court every day.

'''NICE v. Verint'''<br />

The technologies produced by both NICE and Verint intricately involve speech recognition. Both companies are highly competitive in the field of call/contact centers and security intelligence. Since 2004, NICE and Verint have filed multiple patent infringement lawsuits against each other. They are discussed below.

*January 2008: A patent infringement case brought by NICE against Verint ended with a deadlocked jury. It is currently awaiting a new trial.

*May 19, 2008: It was announced that the US District Court for the Northern District of Georgia ruled in favor of Verint in its infringement suit against Nice Systems, and further awarded Verint $3.3 million in damages.<ref> “Verint Wins Patent Lawsuit Against Nice Systems on Speech Analytics Technology,” Verint May 19, 2008 < http://www.swpp.org/pressrelease/2008/0519VerintSpeechAnalyticsPatentRelease.pdf></ref> The suit was over Verint’s patent No. 6,404,857 for a device which can monitor several telephone communications and “relates to emotion detection, word spotting, and talk-over detection in speech analytics solutions.”<ref>L. Klie, “Speech Fills the Docket,” Speech Technology, vol. 13, no. 6, July/August 2008</ref>

*May 27, 2008: US District Court for the Northern District of Georgia found that the technology of Verint Systems Inc. did not infringe on the NICE patent No. 6,871,299. The technology in this patent deals with IP recording.<ref>“Verint Prevails in Second Patent Litigation Case Against Nice in One Week” ''Business Wire'', May 27, 2008, accessed August 8, 2008 <http://news.moneycentral.msn.com/provider/providerarticle.aspx?feed=BW&date=20080527&id=8690055></ref>

*August 2008: Verint is currently awaiting a trial date for the patent infringement case they brought against NICE that involves their patented screen-capture technology. Verint stands to receive over $30 million in damages and an injunction prohibiting NICE from developing or selling any products with infringed technology.<ref>L. Klie, “Speech Fills the Docket.”</ref>

These lawsuits between NICE and Verint are not likely to end anytime soon, as evidenced by the comment of Shlomo Shamir, NICE’s president, that “We believe that Verint’s patents asserted against NICE are invalid, and we intend to continue seeking invalidation of these patents.”<ref>L. Klie, “Speech Fills the Docket,” Speech Technology, vol. 13, no. 6, July/August 2008</ref>


'''Klausner Technologies v. AT&T and Apple'''<br />

June 16, 2008: Klausner Technologies settled the infringement lawsuit it brought against AT&T and Apple over patents for Klausner’s visual voicemail. AT&T and Apple paid Klausner an undisclosed sum and agreed to license the technology from Klausner.<ref>L. Klie, “Speech Fills the Docket.”</ref>

==== Voice User Interface ====

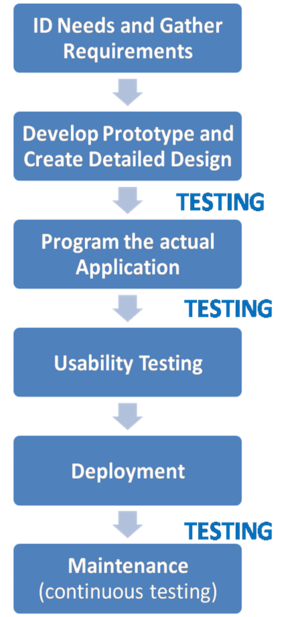

The design cycle of a '''voice user interface''' (VUI) parallels that of the '''[[graphical user interface]]''' (GUI), both following the standard '''[[software development life cycle]]''' (SDLC). Although the SDLC varies slightly depending on the developer and the company implementing it, its steps are generally agreed to be (1) identification of needs, (2) gathering of requirements, (3) prototyping, (4) detailed design, (5) programming, (6) product usability testing, (7) deployment, and (8) maintenance.<ref>B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure,” ''Speech Technology'', March/April 2004, accessed 8/9/2008, < http://www.speechtechmag.com/Articles/Editorial/Feature/Building-Speech-Applications-Part-One---The-Application-and-its-Development-Infrastructure-29437.aspx></ref><ref>“SDLC Resources,” ''MKS'', accessed 8/17/2008, <http://www.mks.com/sdlc></ref>

The VUI design cycle, however, must incorporate input from a much more diverse group of specialists than the GUI cycle needs, including speech scientists, linguists, human factors experts and business experts. Note that in the model of the SDLC provided to the right, Steps 1 and 2 are combined and Steps 3 and 4 are combined.

[[Image:Picture1.png|thumb|"The Software Development Design Cycle" ]]


Once a business has adequately articulated its needs to software developers (step 1 of the SDLC), developers must learn about their users (step 2 of the SDLC). To do this, designers identify [[personas]]: model users who encompass the characteristics, personalities and skill levels of potential users. Designing VUIs based on personas helps ensure that speech prompts are appropriate and understandable to users, and also helps determine the proper dialog style. Dialog style defines the way a user can respond to a speech application. The four main dialog styles are (1) '''directed dialog''', where the user is given a number of choices and must respond with one of those options; (2) '''natural language''' dialog, where the user is free to say whatever they would like; (3) '''mixed initiative''' applications, which use a more confined type of natural language and defer to directed dialog if the user is not understood; and (4) '''form filling''', in which the user answers a series of straightforward questions as they would when filling out a form.<ref>B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure.”</ref>
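The fallback behaviour of a mixed initiative application can be sketched as a single dialog turn. This is a hypothetical illustration, not any product's logic: spotting a known one-word city name among whitespace-separated words stands in for a real natural-language grammar, and the function name, city list, and prompt wording are invented. If exactly one known city is heard, the answer is accepted; if none is heard, or the answer is ambiguous, the application defers to a directed prompt that lists the allowed choices.

```python
def mixed_initiative_turn(utterance, known_cities, directed_choices):
    """One dialog turn: accept a free-form answer if a single known city is heard,
    otherwise fall back to a directed prompt listing the allowed choices."""
    words = utterance.lower().split()
    heard = [city for city in known_cities if city in words]
    if len(heard) == 1:
        return ("accept", heard[0])
    # Nothing recognized, or more than one candidate: defer to directed dialog.
    return ("reprompt", "Please say one of: " + ", ".join(directed_choices))

cities = ["boston", "denver"]
choices = ["Boston", "Denver"]
print(mixed_initiative_turn("I need a flight to Boston please", cities, choices))
# ('accept', 'boston')
print(mixed_initiative_turn("um somewhere warm", cities, choices))
# ('reprompt', 'Please say one of: Boston, Denver')
```

A purely directed dialog would skip the first branch and always issue the explicit prompt; a natural language dialog would have no fallback at all.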

Designers also must carefully decide how to create prompts within the VUI. The two main mechanisms are (1) '''text-to-speech''' (TTS) and (2) '''audio recordings'''. Typical tools break prompts down into words and phrases which can then be combined during application runtime. The algorithms used within the speech application determine the word and phrase combination.<ref>B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure.”</ref>
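Runtime assembly of a prompt from smaller pieces can be sketched as follows. Everything here is hypothetical: the file names, the phrase catalogue (which only covers counts from 0 to 9), and the function names. The same phrase choice feeds both mechanisms: an ordered list of recorded clips to play back to back, or a text string for a TTS engine to render.

```python
# Hypothetical catalogue of recorded fragments, keyed by the words each one speaks.
RECORDINGS = {
    "you have": "you_have.wav",
    "no": "no.wav",
    "new message": "new_message.wav",
    "new messages": "new_messages.wav",
    **{str(n): f"num_{n}.wav" for n in range(1, 10)},  # covers counts 1 to 9 only
}

def _phrases(count):
    """Choose the phrases for 'You have N new message(s)' at runtime."""
    number = "no" if count == 0 else str(count)
    noun = "new message" if count == 1 else "new messages"
    return ["you have", number, noun]

def message_prompt(count):
    """Audio-recording mechanism: the ordered list of clips to play back to back."""
    return [RECORDINGS[phrase] for phrase in _phrases(count)]

def tts_text(count):
    """Text-to-speech mechanism: the same phrases joined into text for a TTS engine."""
    return " ".join(_phrases(count)).capitalize() + "."

print(message_prompt(3))  # ['you_have.wav', 'num_3.wav', 'new_messages.wav']
print(tts_text(1))        # You have 1 new message.
```

Recorded fragments usually sound more natural but must be re-recorded whenever wording changes; TTS handles arbitrary text at the cost of a more synthetic voice.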

Two main forms of speech recognition that have been used in the past are (1) '''constrained grammars''', which contain a large bank of words that the application can identify, and (2) '''scripted interactions''', which take the user through a step-by-step process in which each step moves to a word bank more specific to the user's needs (e.g., a 411 call moving from a “city and state” bank to a “Philadelphia, Pennsylvania” bank).<ref>{{citation | title = Revolutionizing Voice UI for Mobil
| date = May 2008
| author = Vlingo
| url = http://vlingo.com/pdf/Vlingo%20Voice%20Recognition%20White%20Paper%20-%20May%202008v2.pdf}}</ref> To reduce the constraints placed on users, many applications have implemented '''[[Statistical Language Models]]''' (SLMs), in which the large grammars used in the previous techniques are replaced by the statistical “averaging of a large corpus of sample responses recorded live from users responding to a prompt.”<ref>B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure,”</ref> In essence, an SLM estimates the probability, or likelihood, that a word or phrase will occur in a given context.

Building VUIs takes extensive time and money. As a result, the speech industry has responded with a number of application templates. Companies such as ScanSoft, Vialto, Nuance and Unisys provide templates for applications such as 411 directory assistance, voice-activated dialing, corporate greeters and other automated call center applications.

As shown in the SDLC flow chart to the right, testing occurs at multiple points in the design process, including after prototyping, after initial development and after product completion. This repeated testing, namely '''[[usability testing]]''', makes the SDLC an iterative process in which designers test, revise and test again. Usability testing is crucial for developers to learn more about users, discover shortcomings in their products and learn how to make those products better. Two common VUI testing techniques are (1) '''[[Wizard of Oz testing]]''' (WOZ) and (2) '''prototype testing'''. In WOZ testing, “a human tester plays the recorded prompts to a panel of around 100 people who are asked to navigate the prompts and complete a particular task.”<ref>R. Joe, “What’s the Use?” ''Speech Technology''. March 1, 2008, accessed August 17, 2008, < http://www.speechtechmag.com/Articles/Editorial/Feature/What's-the-Use-41059.aspx></ref> This technique focuses on the understandability and effectiveness of the VUI prompts. Other VUI usability testing techniques include '''statistical benchmarks'''; the '''Holistic Usability Measure''' developed by Juan Gilbert, in which clients distribute 100 points across characteristics the developer deems important (e.g., accuracy, understandability and efficiency); and Melanie Polkosky’s statistical measure known as '''factor analysis''', which incorporates user goal orientation, speech characteristics, verbosity and customer service behavior.<ref>M. Polkosky, “What is Speech Usability Anyway?,” ''Speech Technology'', Nov. 7, 2005, accessed August 17, 2008, < http://www.speechtechmag.com/Articles/Editorial/Feature/What-Is-Speech-Usability-Anyway-29601.aspx></ref>

There are many ways to develop UIs and VUIs other than those described here; however, the techniques above are among the most common methodologies in VUI design.

== The Future of Speech Recognition ==

===Emerging Technologies===

'''Mobile Search Applications'''

In recent years there has been a steady movement toward developing speech technologies that replace or enhance text input. This trend can be seen in products such as audio search engines, voicemail-to-text programs, dictation programs and desktop “say what you see” commands.<ref> Microsoft provides this feature on Vista operating systems. To learn more go to http://www.microsoft.com/enable/products/windowsvista/speech.aspx</ref> Recently, both Yahoo! and Microsoft have launched voice-based mobile search applications. The concepts behind Yahoo! oneSearch and Microsoft Tellme are very similar; what differs is their implementation and the speech technology used in the applications. With both products, users speak a search term into their mobile phone or PDA while holding down the green talk button; the request is sent to a server that analyzes the audio, and the search results then appear on the mobile device.<ref>S.H. Wildstrom, “LOOK MA, NO-HANDS SEARCH; Voice-based, mobile search from Microsoft and Yahoo! are imperfect but promising,” ''BusinessWeek'', McGraw-Hill, Inc. vol. 4088, June 16, 2008.</ref> OneSearch is currently available to select BlackBerry users in the US and can be downloaded from [http://m.yahoo.com/voice Yahoo!]. Tellme is available to BlackBerry users in the US by download from [http://m.tellme.com Microsoft] and comes pre-installed on all Helio Mysto phones.

Yahoo! has partnered with vlingo for the speech recognition feature of oneSearch. This voice technology allows users to say exactly what they want; they do not need to know or use special commands, speak slowly or over-articulate. Vlingo’s technology implements Adaptive Hierarchical Language Models (A-HLMs), which allow oneSearch to use regional details and user patterns to adapt to the characteristics of its surroundings, including word pronunciation, accents and acoustics.<ref>E. Keleher and B. Monaghan, “Vlingo Introduces Localized Voice-Recognition Support for Yahoo! oneSearch,” ''vlingo'', June 17, 2008, http://vlingo.com/pdf/vlingo%20Introduces%20Localized%20Voice-rec%20for%20Yahoo%20oneSearch%20FINAL.pdf, accessed: August 8, 2008.</ref> A-HLMs use server-side adaptation, which means that when the system learns a new word or pronunciation from one user, it adapts the language models of all users. For example, if a user in Boston (presumably with a Boston accent) is using oneSearch, data generated by all users from the Boston area will help the application better understand what this user is saying. More information on vlingo can be found at [http://www.vlingo.com vlingo.com].

Microsoft’s subsidiary Tellme took a different approach to the speech recognition element of its mobile search application. Users are instructed to say specific phrases such as “traffic,” “map” or the name of a business. Tellme’s senior product manager, David Mitby, explains why they chose to limit speech parameters: "[because] very solid smartphone users pick this up for the first time, it’s not clear what to do. It’s not intuitive media yet."<ref> R. Joe, “Multiple-Modality Disorder,” ''Speech Technology'', vol 13 no. 5, June 2008.</ref>

| Line 140: | Line 144: | ||

===Future Trends & Applications===

====The Medical Industry====

For years the medical industry has been touting '''electronic medical records''' (EMR). Unfortunately, the industry has been slow to adopt EMRs, and some companies are betting that the reason is data entry: there aren't enough people to enter the multitude of current patients' data into electronic format, and so the paper record prevails. A company called Nuance (also featured elsewhere in this article, and the developer of the software called Dragon Dictate) is betting that it can find a market selling its voice recognition software to physicians who would rather speak patients' data than handwrite all medical information into a person’s file. <ref> http://www.1450.com/speech_enable_emr.pdf </ref><br />

====The Military====

The defense industry has researched voice recognition software in an attempt to make complex, user-intensive applications more efficient and user-friendly. Voice recognition is currently being tested with cockpit displays in aircraft, on the premise that pilots could access needed data faster and more easily. <br />

Command centers are also looking to use voice recognition technology to search and access the vast amounts of data in the databases under their control quickly and concisely during crises. In addition, the military has adopted EMR for patient care and has voiced its commitment to using voice recognition software to enter data into patients' records. <ref>ftp://ftp.scansoft.com/nuance/news/AuntMinnieMilitaryArticle.pdf</ref>

==References==

{{reflist|2}}[[Category:Suggestion Bot Tag]]

[[Category:

Latest revision as of 06:00, 21 October 2024

In computer technology, Speech Recognition refers to the recognition of human speech by computers for the performance of speaker-initiated computer-generated functions (e.g., transcribing speech to text; data entry; operating electronic and mechanical devices; automated processing of telephone calls) — a main element of so-called natural language processing through computer speech technology.

Speech derives from sounds created by the human articulatory system, including the lungs, vocal cords, and tongue. Through exposure to variations in speech patterns during infancy, a child learns to recognize the same words or phrases despite differences in how different people pronounce them, e.g., differences in pitch, tone, emphasis, and intonation pattern. The cognitive ability of the brain enables humans to achieve that remarkable capability. As of this writing (2008), we can reproduce that capability in computers only to a limited degree, though in ways that are still useful.

The Challenge of Speech Recognition

Writing systems are ancient, going back as far as the Sumerians of 6,000 years ago. The phonograph, which allowed the analog recording and playback of speech, dates to 1877. Speech recognition had to await the development of the computer, however, because of the many problems involved in recognizing speech.

First, speech is not simply spoken text--in the same way that Miles Davis playing So What can hardly be captured by a note-for-note rendition as sheet music. What humans understand as discrete words, phrases or sentences with clear boundaries are actually delivered as a continuous stream of sounds: Iwenttothestoreyesterday, rather than I went to the store yesterday. Words can also blend, with Whaddayawa? representing What do you want?

Second, there is no one-to-one correlation between the sounds and letters. In English, there are slightly more than five vowel letters--a, e, i, o, u, and sometimes y and w. There are more than twenty different vowel sounds, though, and the exact count can vary depending on the accent of the speaker. The reverse problem also occurs, where more than one letter can represent a given sound. The letter c can have the same sound as the letter k, as in cake, or as the letter s, as in citrus.

In addition, people who speak the same language do not all use the same sounds; that is, varieties of a language differ in their phonology, or patterns of sound organization. There are different accents: the word 'water' could be pronounced watter, wadder, woader, wattah, and so on. Each person has a distinctive pitch when speaking: men typically have the lowest pitch, while women and children have higher pitches (though there is wide variation and overlap within each group). Pronunciation is also colored by adjacent sounds, the speed at which the user is talking, and even the user's health. Consider how pronunciation changes when a person has a cold.

Lastly, consider that not all sounds consist of meaningful speech. Regular speech is filled with interjections that do not have meaning in themselves, but serve to break up discourse and convey subtle information about the speaker's feelings or intentions: Oh, like, you know, well. There are also sounds that are a part of speech that are not considered words: er, um, uh. Coughing, sneezing, laughing, sobbing, and even hiccupping can be a part of what is spoken. And the environment adds its own noises; speech recognition is difficult even for humans in noisy places.

History of Speech Recognition

Despite the manifold difficulties, speech recognition has been attempted for almost as long as there have been digital computers. As early as 1952, researchers at Bell Labs had developed an Automatic Digit Recognizer, or "Audrey". Audrey attained an accuracy of 97 to 99 percent if the speaker was male, and if the speaker paused 350 milliseconds between words, and if the speaker limited his vocabulary to the digits from one to nine, plus "oh", and if the machine could be adjusted to the speaker's speech profile. Results dipped as low as 60 percent if the recognizer was not adjusted.[1]

Audrey worked by recognizing phonemes, or individual sounds that were considered distinct from each other. The phonemes were correlated to reference models of phonemes that were generated by training the recognizer. Over the next two decades, researchers spent large amounts of time and money trying to improve upon this concept, with little success. Computer hardware improved by leaps and bounds, speech synthesis improved steadily, and Noam Chomsky's idea of generative grammar suggested that language could be analyzed programmatically. None of this, however, seemed to improve the state of the art in speech recognition. Chomsky and Halle's generative work in phonology also led mainstream linguistics to abandon the concept of the "phoneme" altogether, in favour of breaking down the sound patterns of language into smaller, more discrete "features".[2]

In 1969, John R. Pierce wrote a forthright letter to the Journal of the Acoustical Society of America, where much of the research on speech recognition was published. Pierce was one of the pioneers in satellite communications, and an executive vice president at Bell Labs, which was a leader in speech recognition research. Pierce said everyone involved was wasting time and money.

It would be too simple to say that work in speech recognition is carried out simply because one can get money for it. . . .The attraction is perhaps similar to the attraction of schemes for turning water into gasoline, extracting gold from the sea, curing cancer, or going to the moon. One doesn't attract thoughtlessly given dollars by means of schemes for cutting the cost of soap by 10%. To sell suckers, one uses deceit and offers glamor.[3]

Pierce's 1969 letter marked the end of official research at Bell Labs for nearly a decade. The defense research agency ARPA, however, chose to persevere. In 1971 they sponsored a research initiative to develop a speech recognizer that could handle at least 1,000 words and understand connected speech, i.e., speech without clear pauses between each word. The recognizer could assume a low-background-noise environment, and it did not need to work in real time.

By 1976, three contractors had developed six systems. The most successful system, developed by Carnegie Mellon University, was called Harpy. Harpy was slow—a four-second sentence would have taken more than five minutes to process. It also still required speakers to 'train' it by speaking sentences to build up a reference model. Nonetheless, it did recognize a thousand-word vocabulary, and it did support connected speech.[4]

Research continued on several paths, but Harpy was the model for future success. It used hidden Markov models and statistical modeling to extract meaning from speech. In essence, speech was broken up into overlapping small chunks of sound, and probabilistic models inferred the most likely words or parts of words in each chunk, and then the same model was applied again to the aggregate of the overlapping chunks. The procedure is computationally intensive, but it has proven to be the most successful.

Throughout the 1970s and 1980s research continued. By the 1980s, most researchers were using hidden Markov models, which are behind all contemporary speech recognizers. In the latter part of the 1980s and in the 1990s, DARPA (the renamed ARPA) funded several initiatives. The first initiative was similar to the previous challenge: the requirement was still a one-thousand word vocabulary, but this time a rigorous performance standard was devised. This initiative produced systems that lowered the word error rate from ten percent to a few percent. Additional initiatives have focused on improving algorithms and improving computational efficiency.[5]

In 2001, Microsoft released a speech recognition system that worked with Office XP. It neatly encapsulated how far the technology had come in fifty years, and what its limitations still were. The system had to be trained to a specific user's voice by reading aloud works of great authors that were provided with it, such as Edgar Allan Poe's The Fall of the House of Usher, and Bill Gates' The Way Forward. Even after training, the system was fragile enough that a warning was provided: "If you change the room in which you use Microsoft Speech Recognition and your accuracy drops, run the Microphone Wizard again." On the plus side, the system did work in real time, and it did recognize connected speech.

Speech Recognition as of 2009

Technology

Current voice recognition technologies rely on mathematically analyzing the sound waves formed by our voices, using resonance and spectrum analysis. Computer systems first capture the sound waves spoken into a microphone through an analog-to-digital converter. The analog, or continuous, sound wave that we produce when we say a word is sliced up into small time fragments. These fragments are then measured by their amplitude, which reflects the degree of compression of the air released from the speaker's mouth. How often the wave must be sampled to convert it faithfully to digital format is governed by the Nyquist-Shannon sampling theorem, which the industry has commonly relied on.[6]
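The digitizing and slicing steps just described can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: it assumes a synthetic 440 Hz tone in place of a microphone signal, 16-bit quantization, a 16 kHz sampling rate, and 25 ms frames taken every 10 ms (common choices, but assumptions here). All function names are hypothetical.

```python
import math

def sample_tone(freq_hz, sample_rate_hz, duration_s, amplitude=0.5):
    """Sample a pure tone at discrete instants, standing in for an ADC."""
    n_samples = round(sample_rate_hz * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

def quantize_16bit(samples):
    """Map floating-point samples in [-1.0, 1.0] to signed 16-bit integers."""
    return [max(-32768, min(32767, round(s * 32767))) for s in samples]

def split_frames(samples, frame_len, hop):
    """Slice the signal into fixed-length frames that start every `hop` samples."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]

def rms(frame):
    """Root-mean-square amplitude of one frame, a simple loudness measure."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

if __name__ == "__main__":
    rate = 16_000                                        # samples per second (assumed)
    pcm = quantize_16bit(sample_tone(440, rate, 0.1))    # 0.1 s of audio
    frames = split_frames(pcm, frame_len=400, hop=160)   # 25 ms frames, 10 ms hop
    print(len(pcm), "samples ->", len(frames), "frames")  # 1600 samples -> 8 frames
    print("RMS of first frame:", round(rms(frames[0])))
```

Because consecutive frames overlap, each sample contributes to more than one frame. Real recognizers typically go on to compute spectral features for every frame rather than a single amplitude value.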

Nyquist-Shannon Theorem

The Nyquist-Shannon theorem traces back to Harry Nyquist's work in 1928. It states that an analog signal containing no frequencies above some maximum can be reconstructed exactly from digital samples if the sampling rate is more than twice that maximum frequency. Intuitively, each cycle of a sound wave must be sampled at least twice: once during compression and once during rarefaction. For example, audio containing frequencies up to 20 kHz can be accurately represented by sampling at 44.1 kHz, which exceeds the required 40 kHz.
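What goes wrong when the sampling rate is too low can be shown numerically. In this sketch (the rates and tone frequencies are chosen only for illustration), a 7 kHz tone sampled at 8 kHz, well below the 14 kHz the theorem requires, produces the same samples as a 1 kHz tone, so the two can no longer be told apart. This effect is called aliasing.

```python
import math

def sample_cosine(freq_hz, sample_rate_hz, n_samples):
    """Return n_samples of cos(2*pi*f*t) taken at t = n / sample_rate_hz."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

def satisfies_nyquist(max_freq_hz, sample_rate_hz):
    """True if the sampling rate is more than twice the highest frequency."""
    return sample_rate_hz > 2 * max_freq_hz

if __name__ == "__main__":
    rate = 8_000
    high = sample_cosine(7_000, rate, 32)  # 7 kHz is above the 4 kHz limit
    low = sample_cosine(1_000, rate, 32)   # its alias: 8 kHz - 7 kHz = 1 kHz
    indistinguishable = all(math.isclose(a, b, abs_tol=1e-9)
                            for a, b in zip(high, low))
    print(indistinguishable)                  # True
    print(satisfies_nyquist(7_000, rate))     # False
    print(satisfies_nyquist(20_000, 44_100))  # True: 44.1 kHz > 40 kHz
```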

How It Works

Speech recognition programs commonly use statistical models to account for variations in dialect, accent, background noise, and pronunciation. These models have progressed to the point that accuracy of over 90% can be achieved in a quiet environment. While every company has its own proprietary technology for processing spoken input, there are four common approaches to recognizing speech.

- 1. Template-Based: This model uses a database of speech patterns built into the program. When voice input is received, recognition occurs by matching the input against the database, using dynamic programming algorithms. The drawback of this approach is that the recognition model is not flexible enough to understand voice patterns unlike those in the database.

- 2. Knowledge-Based: Knowledge-based speech recognition analyzes spectrograms of the speech to gather data and create rules that map that data to the commands or words the user said. Knowledge-Based recognition does not make use of linguistic or phonetic knowledge about speech.

- 3. Stochastic: Stochastic speech recognition is the most common approach today. Stochastic methods of voice analysis use probability models to represent the uncertainty of the spoken input. The most popular probability model is the HMM (hidden Markov model), with which the recognizer chooses the word string that maximizes the following expression:

<math>\hat{W} = \arg\max_{W} \, p(Y_t \mid W) \, p(W)</math>

Here Y_t is the observed acoustic data, p(W) is the a priori probability of a particular word string, p(Y_t|W) is the probability of the observed acoustic data given the acoustic models, W is a hypothesised word string, and Ŵ is the most probable word string, which the recognizer outputs.

When analyzing spoken input, the HMM has proven successful because the algorithm takes into account a language model, an acoustic model of how humans speak, and a lexicon of known words.

- 4. Connectionist: In connectionist speech recognition, knowledge about spoken input is gained by analyzing example input and storing what is learned in artificial neural networks of various kinds, from simple multi-layer perceptrons to time-delay neural networks and recurrent neural networks.
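The template-based approach in item 1 matches input against stored patterns using dynamic programming. The classic dynamic-programming technique for this is dynamic time warping (DTW), which aligns two sequences spoken at different speeds. The sketch below is a minimal illustration that assumes each frame has already been reduced to a single made-up number; real systems compare vectors of acoustic features, and the templates and function names are hypothetical.

```python
import math

def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D feature sequences."""
    n, m = len(a), len(b)
    # cost[i][j] = lowest cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # repeat a frame of b
                                 cost[i][j - 1],      # repeat a frame of a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]

def recognize(utterance, templates):
    """Return the label of the stored template closest to the utterance."""
    return min(templates,
               key=lambda label: dtw_distance(utterance, templates[label]))

if __name__ == "__main__":
    # Hypothetical per-frame features for two stored words.
    templates = {
        "yes": [1, 3, 5, 3, 1],
        "no": [5, 5, 1, 1, 5],
    }
    spoken = [1, 1, 3, 5, 5, 3, 1]         # "yes", spoken more slowly
    print(recognize(spoken, templates))    # yes
```

Because DTW can stretch one sequence against the other, the slowly spoken input still aligns perfectly with the "yes" template. The same design also reflects the weakness noted above: the input can only match a pattern that is already in the database.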

As stated above, programs that utilize stochastic models to analyze spoken language are most common today and have proven to be the most successful.[7]
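Decoding with an HMM is usually done with the Viterbi algorithm, which finds the single most likely sequence of hidden states (for example, sound units) behind a sequence of observations. The sketch below uses an invented two-state model of the word "see" and two coarse observation labels; real recognizers use far larger models over continuous acoustic features, so treat every name and probability here as an illustrative assumption. Log probabilities are used so that long utterances do not underflow.

```python
import math

def _log(p):
    """Natural log that maps probability 0 to minus infinity."""
    return math.log(p) if p > 0 else -math.inf

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path and its log probability."""
    # best[s] = (log prob of the best path ending in state s, that path)
    best = {s: (_log(start_p[s]) + _log(emit_p[s][observations[0]]), [s])
            for s in states}
    for obs in observations[1:]:
        step = {}
        for s in states:
            # Keep only the highest-scoring path that enters state s.
            score, path = max(
                (best[prev][0] + _log(trans_p[prev][s]), best[prev][1])
                for prev in states)
            step[s] = (score + _log(emit_p[s][obs]), path + [s])
        best = step
    score, path = max(best.values())
    return path, score

if __name__ == "__main__":
    # Toy model of "see": a fricative /s/ followed by the vowel /iy/.
    states = ["s", "iy"]
    start_p = {"s": 0.8, "iy": 0.2}
    trans_p = {"s": {"s": 0.6, "iy": 0.4}, "iy": {"s": 0.1, "iy": 0.9}}
    # Observations are coarse labels: "hi" = mostly high-frequency energy.
    emit_p = {"s": {"hi": 0.9, "lo": 0.1}, "iy": {"hi": 0.2, "lo": 0.8}}
    path, score = viterbi(["hi", "hi", "lo", "lo"],
                          states, start_p, trans_p, emit_p)
    print(path)  # ['s', 's', 'iy', 'iy']
    print(round(math.exp(score), 6))
```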

Recognizing Commands

The most important goal of current speech recognition software is to recognize commands, which increases the functionality of speech software. Software such as Microsoft Sync is built into many new vehicles, supposedly allowing users to access all of the car’s electronic accessories hands-free. This software is adaptive: it asks the user a series of questions and uses the pronunciation of commonly used words to derive speech constants. These constants are then factored into the speech recognition algorithms, allowing the application to provide better recognition in the future. Current tech reviewers have said the technology is much improved since the early 1990s but will not be replacing hand controls any time soon.[8]

Dictation

Second to command recognition is dictation. Today's market sees value in dictation software for transcribing medical records (discussed below) and students' papers, and as a more productive way to get one's thoughts down in writing. In addition, many companies see value in dictation for translation: users could have their words translated for written letters, or translated so that they could then say the words back to another party in that party's native language. Products of these types already exist in the market today.

Errors in Interpreting the Spoken Word

As speech recognition programs process spoken words, their success depends on their ability to minimize errors. Two measures are used to gauge this: Single Word Error Rate (SWER) and Command Success Rate (CSR). A single word error is, simply put, a misunderstanding of one word in a spoken sentence. While SWERs can be found in command recognition programs, they are most commonly found in dictation software. Command Success Rate measures how often a spoken command is interpreted accurately. Not every word in a command statement has to be interpreted correctly, as long as the recognition program can use mathematical models to deduce the command the user wants to execute.
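The difference between the two measures can be illustrated with a short sketch. It computes the standard word error rate (word substitutions, deletions, and insertions divided by the number of words actually spoken), used here as a stand-in for the single-word-error measure described above. The nearest-command rule in `interpret_command` is a hypothetical substitute for the mathematical models real products use, and the commands and recognizer outputs are invented.

```python
def word_errors(reference, hypothesis):
    """Minimum word substitutions + deletions + insertions (Levenshtein on words)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))          # row for an empty reference
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(prev[j] + 1,             # delete r
                         cur[j - 1] + 1,          # insert h
                         prev[j - 1] + (r != h))  # substitute, or match for free
        prev = cur
    return prev[-1]

def corpus_wer(trials):
    """Total word errors divided by total reference words."""
    errors = sum(word_errors(ref, hyp) for ref, hyp in trials)
    words = sum(len(ref.split()) for ref, _ in trials)
    return errors / words

def interpret_command(hypothesis, commands):
    """Map recognized text to the known command needing the fewest word edits."""
    return min(commands, key=lambda cmd: word_errors(cmd, hypothesis))

def command_success_rate(trials, commands):
    """Share of trials whose recognized text maps back to the spoken command."""
    hits = sum(interpret_command(hyp, commands) == ref for ref, hyp in trials)
    return hits / len(trials)

if __name__ == "__main__":
    commands = ["call home", "play radio", "turn up volume"]
    # (what the user said, what the recognizer heard): invented examples
    trials = [("call home", "fall home"),
              ("play radio", "play the radio"),
              ("turn up volume", "turn off volume")]
    print(round(corpus_wer(trials), 3))            # 0.429 (3 errors / 7 words)
    print(command_success_rate(trials, commands))  # 1.0
```

In this example every utterance contains a word error, yet every command is still carried out, which is exactly the gap between the two measures.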

Business

Major Speech Technology Companies

As the speech technology industry grows, more companies are entering the field, bringing new products and ideas with them. Some of the leaders in voice recognition technologies (but by no means all of them) are listed below.

- NICE Systems (NASDAQ: NICE and Tel Aviv: Nice), headquartered in Israel and founded in 1986, specializes in digital recording and archiving technologies. In 2007 it reported $523 million in revenue. For more information visit http://www.nice.com.

- Verint Systems Inc. (OTC: VRNT), headquartered in Melville, New York, and founded in 1994, describes itself as “A leading provider of actionable intelligence solutions for workforce optimization, IP video, communications interception, and public safety.”[9] For more information visit http://verint.com.

- Nuance (NASDAQ: NUAN), headquartered in Burlington, MA, develops speech and image technologies for business and customer service uses. For more information visit http://www.nuance.com/.

- Vlingo, headquartered in Cambridge, MA, develops speech recognition technology that interfaces with wireless/mobile technologies. Vlingo has recently teamed up with Yahoo!, providing the speech recognition technology for Yahoo!’s mobile search service, oneSearch. For more information visit http://vlingo.com.

Other major companies involved in Speech Technologies include: Unisys, ChaCha, SpeechCycle, Sensory, Microsoft's Tellme, Klausner Technologies and many more.

Patent Infringement Lawsuits

Given the highly competitive nature of both business and technology, it is not surprising that speech companies have brought numerous patent infringement lawsuits against one another. Each element involved in developing a speech recognition device can be claimed as a separate technology and patented as such. Using a technology that another company or individual has patented, even one developed independently, can make the user liable for monetary damages and often results in an injunction preventing the company from using the technology any further. Because the politics and business of the speech industry are tightly tied to speech technology development, it is important to recognize the legal and political barriers that may impede further developments in this industry. Below are descriptions of a number of patent infringement lawsuits. There are currently many more such suits on the docket, and more are brought to court every day.

NICE v. Verint

The technologies produced by both NICE and Verint are closely tied to speech recognition. The two companies compete intensely in the field of call/contact centers and security intelligence. Since 2004, NICE and Verint have filed multiple patent infringement lawsuits against each other. They are discussed below.

- January 2008: A patent infringement case brought by NICE against Verint ended with a deadlocked jury. It is currently awaiting a new trial.

- May 19, 2008: It was announced that the US District Court for the Northern District of Georgia had ruled in favor of Verint in its infringement suit against NICE Systems and had awarded Verint $3.3 million in damages.[10] The suit concerned Verint’s patent No. 6,404,857 for a device that can monitor several telephone communications and that “relates to emotion detection, word spotting, and talk-over detection in speech analytics solutions.”[11]

- May 27, 2008: US District Court for the Northern District of Georgia found that the technology of Verint Systems Inc. did not infringe on the NICE patent No. 6,871,299. The technology in this patent deals with IP recording.[12]

- August 2008: Verint is currently awaiting a trial date for the patent infringement case they brought against NICE that involves their patented screen-capture technology. Verint stands to receive over $30 million in damages and an injunction prohibiting NICE from developing or selling any products with infringed technology.[13]

These lawsuits between NICE and Verint are not likely to end anytime soon, as evidenced by the comment of Shlomo Shamir, NICE’s president: “We believe that Verint’s patents asserted against NICE are invalid, and we intend to continue seeking invalidation of these patents.”[14]

Nuance v. vlingo

June 16, 2008: Nuance filed a claim in the United States District Court for the Eastern District of Texas against vlingo for infringement of Patent No. 6,766,295, “Adaptation of a Speech Recognition System across Multiple Remote Sessions with a Speaker”. The technology described in this patent essentially amounts to storing multiple acoustic models for one speaker. If the suit is decided in favor of Nuance, Nuance stands to receive damages and an injunction against the use of its technology.[15]

Klausner Technologies v. AT&T and Apple

June 16, 2008: Klausner Technologies settled the infringement lawsuit it had brought against AT&T and Apple over Klausner’s visual voicemail patents. AT&T and Apple paid Klausner an undisclosed sum and agreed to license the technology from Klausner.[16]

Voice User Interface

The design cycle of a voice user interface (VUI) parallels that of the graphical user interface (GUI), both following the standard software development life cycle (SDLC). Although the SDLC varies slightly depending on the developer and the company implementing it, it is generally agreed to consist of (1) identification of needs, (2) gathering of requirements, (3) prototyping, (4) detailed design, (5) programming, (6) product usability testing, (7) deployment and (8) maintenance.[17][18] The VUI design cycle, however, must incorporate input from a much more diverse group of specialists than the GUI cycle, including speech scientists, linguists, human factors experts and business experts. Please note that in the model of the SDLC provided to the right, Steps 1 and 2 are combined and Steps 3 and 4 are combined.

Once a business has adequately articulated its needs to software developers (step 1 of SDLC), developers must learn about their users (step 2 of SDLC). To do this, designers identify personas: model users who embody the characteristics, personalities and skill levels of potential users. Designing VUIs around personas helps ensure that speech prompts are appropriate and understandable to users, and also helps determine the proper dialog style. Dialog style defines the way a user can respond to a speech application. The four main dialog styles are (1) directed dialog, where the user is given a number of choices and must respond with one of those options; (2) natural language dialog, where the user is free to say whatever they like; (3) mixed initiative applications, which use a more constrained form of natural language and fall back to directed dialog if the user is not understood; and (4) form filling, in which the user answers a series of straightforward questions, as they would when filling out a form.[19]
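Form filling and directed dialog can be combined in a small dialog manager. This is a hypothetical sketch, not the design of any product named in this article: the `Slot` class, the `run_form` function, and the list of canned replies (standing in for recognizer output) are all assumptions. Each slot is asked as a form-filling question; if the answer is not one of the expected choices, the manager falls back to a directed prompt that lists the valid options, loosely mirroring the fallback behavior described for mixed initiative applications.

```python
from dataclasses import dataclass

@dataclass
class Slot:
    """One question in a form-filling dialog (hypothetical structure)."""
    name: str
    prompt: str
    choices: tuple = ()  # allowed answers; empty means accept any answer

def run_form(slots, replies, max_retries=2):
    """Ask each slot's question in order, re-prompting with explicit choices.

    `replies` stands in for the recognizer's output for each user turn.
    Returns the filled form and the list of prompts that were played.
    """
    turns = iter(replies)
    form, prompts_played = {}, []
    for slot in slots:
        prompt = slot.prompt
        for _ in range(max_retries + 1):
            prompts_played.append(prompt)
            reply = next(turns, "").strip().lower()
            if not slot.choices or reply in slot.choices:
                form[slot.name] = reply
                break
            # Not understood: fall back to a directed prompt listing the options.
            prompt = f"Please say one of: {', '.join(slot.choices)}."
        else:
            form[slot.name] = None  # retries exhausted, e.g. hand off to an agent
    return form, prompts_played

if __name__ == "__main__":
    slots = [
        Slot("service", "How can I help you: billing or repairs?",
             ("billing", "repairs")),
        Slot("account", "What is your account number?"),
    ]
    form, played = run_form(slots, ["um, I have a question", "billing", "12345"])
    print(form)  # {'service': 'billing', 'account': '12345'}
    for p in played:
        print(p)
```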

Designers must also decide carefully how to create the prompts within the VUI. The two main mechanisms are (1) text-to-speech (TTS) and (2) audio recordings. Typical tools break prompts down into words and phrases that can then be combined at application runtime; the algorithms within the speech application determine which combination of words and phrases is played.[20]
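A minimal sketch of runtime prompt assembly, assuming a hypothetical table of pre-recorded phrases and falling back to TTS for anything that has no recording. The file names and the `("audio", ...)` / `("tts", ...)` playlist format are illustrative assumptions; a real platform would pass the playlist to its own audio player and TTS engine.

```python
from typing import List, Tuple

# Hypothetical library of pre-recorded prompt segments (phrase -> audio file).
RECORDINGS = {
    "you have": "you_have.wav",
    "new messages": "new_messages.wav",
    "1": "one.wav",
    "2": "two.wav",
    "3": "three.wav",
}


def assemble_prompt(phrases: List[str]) -> List[Tuple[str, str]]:
    """Turn a sequence of phrases into a playlist of prompt segments.

    Phrases with a recording are played from audio; anything else is
    handed to a text-to-speech engine (assumed, not shown here).
    """
    playlist = []
    for phrase in phrases:
        if phrase in RECORDINGS:
            playlist.append(("audio", RECORDINGS[phrase]))
        else:
            playlist.append(("tts", phrase))
    return playlist


def new_message_prompt(count: int) -> List[Tuple[str, str]]:
    """Application logic picks the phrase combination, e.g. 'You have 2 new messages'."""
    return assemble_prompt(["you have", str(count), "new messages"])
```

Here `new_message_prompt(2)` plays three recordings, while `new_message_prompt(12)` synthesizes only the unrecorded number with TTS.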

Two main forms of speech recognition that have been used in the past are (1) constrained grammars, which contain a large bank of words that the application can identify, and (2) scripted interactions, which take the user through a step-by-step process in which each step moves to a word bank more specific to the user's needs (e.g., a 411 call moving from a “city and state” bank to a “Philadelphia, Pennsylvania” bank).[21] To reduce the constraints placed on users, many applications have implemented statistical language models (SLMs), in which the large grammars used in the previous techniques are replaced by the statistical “averaging of a large corpus of sample responses recorded live from users responding to a prompt.”[22] In essence, an SLM estimates the probability, or likelihood, that a word or phrase will occur in a given context.
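To illustrate how an SLM estimates the likelihood of a word in context, here is a bigram model trained on a handful of hypothetical transcribed responses using plain maximum-likelihood counts. Commercial SLMs use much larger corpora, longer contexts, and smoothing for unseen word pairs, so this is a sketch of the idea rather than any vendor's method.

```python
from collections import Counter, defaultdict


def train_bigram_model(responses):
    """Count word pairs in a corpus of transcribed user responses.

    '<s>' marks the start of each response so the model also learns
    which words tend to begin a reply.
    """
    pair_counts = defaultdict(Counter)
    for response in responses:
        words = ["<s>"] + response.lower().split()
        for prev, word in zip(words, words[1:]):
            pair_counts[prev][word] += 1
    return pair_counts


def bigram_probability(model, prev, word):
    """Maximum-likelihood estimate of P(word | prev); 0.0 if prev was never seen."""
    following = model.get(prev.lower())
    if not following:
        return 0.0
    return following[word.lower()] / sum(following.values())
```

With the responses "pay my bill", "pay my bill please", "check my balance", and "talk to an agent", the model assigns P(bill | my) = 2/3 and P(agent | my) = 0; that zero for an unseen pair is why real systems add smoothing.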

Building VUIs requires extensive time and money. As a result, the speech industry has responded with a number of application templates. Companies such as ScanSoft, Vialto, Nuance, and Unisys provide templates for 411 directory assistance, voice-activated dialing, corporate greeters, and a number of other automated call center applications.

As shown in the SDLC flow chart to the right, testing occurs after multiple steps of the design process, including after prototyping, initial development, and product completion. This repeated testing, namely usability testing, makes the SDLC an iterative process in which designers test, revise, and test again. Usability testing is crucial for developers to learn more about users, to discover shortcomings within products, and to learn how to improve them. Two common VUI testing techniques are (1) Wizard of Oz (WOZ) testing and (2) prototype testing. In one description of WOZ testing, “a human tester plays the recorded prompts to a panel of around 100 people who are asked to navigate the prompts and complete a particular task.”[23] This technique focuses on the understandability and effectiveness of the VUI prompts. Other VUI usability testing techniques include statistical benchmarks; the Holistic Usability Measure developed by Juan Gilbert, in which clients distribute 100 points across characteristics the developer deems important (e.g., accuracy, understandability, and efficiency); and Melanie Polkosky’s statistical measure, based on factor analysis, which incorporates user goal orientation, speech characteristics, verbosity, and customer service behavior.[24]
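The point-distribution step of the Holistic Usability Measure can be sketched as follows, assuming each participant's allocation is recorded as a mapping from characteristic names to points. Only the "distribute 100 points" step described above is modeled; averaging the allocations across participants is an illustrative assumption, not Gilbert's full scoring procedure.

```python
from typing import Dict, List


def average_allocation(allocations: List[Dict[str, int]]) -> Dict[str, float]:
    """Return the mean points per characteristic across participants.

    Each participant must split exactly 100 points across the characteristics;
    a characteristic a participant leaves out counts as 0 points for them.
    """
    if not allocations:
        raise ValueError("need at least one allocation")
    totals: Dict[str, float] = {}
    for points in allocations:
        if sum(points.values()) != 100:
            raise ValueError(f"allocation does not sum to 100: {points}")
        for characteristic, value in points.items():
            totals[characteristic] = totals.get(characteristic, 0) + value
    return {c: total / len(allocations) for c, total in totals.items()}
```

For two participants who allocate 50/30/20 and 40/40/20 points to accuracy, understandability, and efficiency, the averages are 45, 35, and 20, showing which characteristics users weight most.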

There are many ways to develop UIs and VUIs other than those described here; however, the techniques above are among the most common methodologies used in VUI design.

The Future of Speech Recognition

Emerging Technologies

Mobile Search Applications

In recent years there has been a steady movement toward developing speech technologies that replace or enhance text input applications. This trend can be seen in products such as audio search engines, voicemail-to-text programs, dictation programs, and desktop “say what you see” commands.[25] Recently, both Yahoo! and Microsoft have launched voice-based mobile search applications. The concepts behind Yahoo! oneSearch and Microsoft Tellme are very similar; what differs is their implementation and the speech technology they use. With both products, users speak a search term into their mobile phone or PDA while holding down the green talk button; the recording is sent to a server that analyzes it, and the search results then appear on the mobile device.[26] OneSearch is currently available to select BlackBerry users in the US and can be downloaded from Yahoo!. Tellme is available to BlackBerry users in the US as a download from Microsoft and comes pre-installed on all Helio Mysto phones.

Yahoo! has partnered with vlingo for the speech recognition feature of oneSearch. This voice technology allows users to say exactly what they want; they do not need to know or use special commands, speak slowly, or over-articulate. Vlingo’s technology implements Adaptive Hierarchical Language Models (A-HLMs), which allow oneSearch to use regional details and user patterns to adapt to the characteristics of its surroundings, including word pronunciation, accents, and acoustics.[27] A-HLMs use server-side adaptation, which means that when the system learns a new word or pronunciation from one user, it adapts the language models of all users. For example, if a user in Boston (presumably with a Boston accent) is using oneSearch, data generated by all users from the Boston area will help the application better understand what this user is saying. More information on vlingo can be found at vlingo.com.
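One plausible way to realize the shared, region-aware adaptation described above is to pool word counts on the server at two levels, region and global, and interpolate between them. This is a hypothetical sketch: vlingo's actual A-HLM design is not described here, and the class name, the unigram counts, and the 0.5 default mixing weight are all assumptions.

```python
from collections import Counter, defaultdict


class SharedAdaptiveModel:
    """Toy server-side model: every user's recognized words update a global
    count and a count for that user's region; probabilities blend the two."""

    def __init__(self, region_weight: float = 0.5):
        if not 0.0 <= region_weight <= 1.0:
            raise ValueError("region_weight must be between 0 and 1")
        self.region_weight = region_weight  # hypothetical mixing weight
        self.global_counts = Counter()
        self.region_counts = defaultdict(Counter)

    def observe(self, region: str, words) -> None:
        """Record words from one user's request; the update is shared by all users."""
        for word in words:
            self.global_counts[word] += 1
            self.region_counts[region][word] += 1

    def probability(self, region: str, word: str) -> float:
        """Interpolate the regional and global unigram estimates of a word."""

        def estimate(counts: Counter) -> float:
            total = sum(counts.values())
            return counts[word] / total if total else 0.0

        regional = estimate(self.region_counts.get(region, Counter()))
        overall = estimate(self.global_counts)
        return self.region_weight * regional + (1 - self.region_weight) * overall
```

Because every user's requests update the shared counts, a word first heard from one Boston caller immediately raises its probability for other Boston callers, and to a lesser degree for everyone else.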

Microsoft’s subsidiary Tellme took a different approach to the speech recognition element of its mobile search application. Users are instructed to say specific phrases such as “traffic,” “map,” or the name of a business. Tellme’s senior product manager, David Mitby, explains why they chose to limit speech parameters: "[because] very solid smartphone users pick this up for the first time, it’s not clear what to do. It’s not intuitive media yet."[28]

Other companies with mobile search applications include ChaCha, V-Enable and Go2.

Future Trends & Applications

The Medical Industry

For years the medical industry has been touting electronic medical records (EMRs). Unfortunately, the industry has been slow to adopt them, and some companies are betting that the reason is data entry: there are not enough people to enter the multitude of current patients' data in electronic format, and because of that the paper record prevails. Nuance (also featured elsewhere in this article, and developer of the software called Dragon Dictate) is betting that it can find a market selling its voice recognition software to physicians who would rather speak patients' data than handwrite all medical information into a patient's file.[29]

The Military

The defense industry has researched voice recognition software in an attempt to make complex, user-intensive applications more efficient and user-friendly. Voice recognition is currently being tested with aircraft cockpit displays, on the premise that pilots could access needed data faster and more easily.

Command centers are also looking to use voice recognition technology to search and access the vast amounts of data in the databases under their control quickly and concisely during crises. In addition, the military has adopted EMRs for patient care and has voiced its commitment to using voice recognition software to enter data into patients' records.[30]

References

- ↑ K.H. Davis, R. Biddulph, S. Balashek: Automatic recognition of spoken digits. Journal of the Acoustical Society of America. 24, 637-642 (1952)

- ↑ Chomsky, N. & M. Halle (1968) The Sound Pattern of English. New York: Harper and Row.

- ↑ J.R. Pierce: Whither Speech Recognition. Journal of the Acoustical Society of America. 46, 1049-1051 (1969)

- ↑ Robert D. Rodman. Computer Speech Technology. Massachusetts: Artech House 1999

- ↑ Pieraccini, R. and Lubensky, D.: Spoken Language Communication with Machines: The Long and Winding Road from Research to Business. In Innovations in Applied Artificial Intelligence/Lecture Notes in Artificial Intelligence 3533, pp. 6-15, M. Ali and F. Esposito (Eds.) 2005

- ↑ Jurafsky, D. and Martin, J. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. New Jersey: Prentice Hall 2006

- ↑ Gales, Mark John Francis. Model Based Techniques for Noise Robust Speech Recognition. Gonville and Caius College, September 1995.

- ↑ http://etech.eweek.com/content/enterprise_applications/recognizing_speech_recognition.html

- ↑ "About Verint," Verint, accessed August 8, 2008, <http://verint.com/corporate/index.cfm>

- ↑ “Verint Wins Patent Lawsuit Against Nice Systems on Speech Analytics Technology,” Verint, May 19, 2008, <http://www.swpp.org/pressrelease/2008/0519VerintSpeechAnalyticsPatentRelease.pdf>

- ↑ L. Klie, “Speech Fills the Docket,” Speech Technology, vol. 13, no. 6, July/August 2008

- ↑ “Verint Prevails in Second Patent Litigation Case Against Nice in One Week” Business Wire, May 27, 2008, accessed August 8, 2008 <http://news.moneycentral.msn.com/provider/providerarticle.aspx?feed=BW&date=20080527&id=8690055>

- ↑ L. Klie, “Speech Fills the Docket.”

- ↑ L. Klie, “Speech Fills the Docket,” Speech Technology, vol. 13, no. 6, July/August 2008

- ↑ “Nuance Asserts Intellectual Property in Speech Adaptation Domain, Files Patent Infringement,” Reuters, June 16, 2008 <http://www.reuters.com/article/pressRelease/idUS194736+16-Jun-2008+BW20080616>

- ↑ L. Klie, “Speech Fills the Docket.”

- ↑ B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure,” Speech Technology, March/April 2004, accessed August 9, 2008, <http://www.speechtechmag.com/Articles/Editorial/Feature/Building-Speech-Applications-Part-One---The-Application-and-its-Development-Infrastructure-29437.aspx>

- ↑ “SDLC Resources,” MKS, accessed August 17, 2008, <http://www.mks.com/sdlc>

- ↑ B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure.”

- ↑ B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure.”

- ↑ Vlingo (May 2008), Revolutionizing Voice UI for Mobil

- ↑ B. Scholz, “Building Speech Applications: Part One – The Application and its Development Infrastructure,”

- ↑ R. Joe, “What’s the Use?” Speech Technology, March 1, 2008, accessed August 17, 2008, <http://www.speechtechmag.com/Articles/Editorial/Feature/What's-the-Use-41059.aspx>

- ↑ M. Polkosky, “What is Speech Usability Anyway?,” Speech Technology, Nov. 7, 2005, accessed August 17, 2008, <http://www.speechtechmag.com/Articles/Editorial/Feature/What-Is-Speech-Usability-Anyway-29601.aspx>

- ↑ Microsoft provides this feature in the Windows Vista operating system. To learn more, go to http://www.microsoft.com/enable/products/windowsvista/speech.aspx

- ↑ S.H. Wildstrom, “LOOK MA, NO-HANDS SEARCH; Voice-based, mobile search from Microsoft and Yahoo! are imperfect but promising,” BusinessWeek, McGraw-Hill, Inc. vol. 4088, June 16, 2008.

- ↑ E. Keleher and B. Monaghan, “Vlingo Introduces Localized Voice-Recognition Support for Yahoo! oneSearch,” vlingo, June 17, 2008, http://vlingo.com/pdf/vlingo%20Introduces%20Localized%20Voice-rec%20for%20Yahoo%20oneSearch%20FINAL.pdf, accessed: August 8, 2008.

- ↑ R. Joe, “Multiple-Modality Disorder,” Speech Technology, vol. 13, no. 5, June 2008.

- ↑ http://www.1450.com/speech_enable_emr.pdf

- ↑ ftp://ftp.scansoft.com/nuance/news/AuntMinnieMilitaryArticle.pdf